Mozilla announced:

There’s pretty broad agreement that HTTPS is the way forward for the web. In recent months, there have been statements from IETF, IAB (even the other IAB), W3C, and the US Government calling for universal use of encryption by Internet applications, which in the case of the web means HTTPS.

I’m on board with this development 100%. I say this as a web developer who has, and will face some uphill battles to bring everything into HTTPS land. It won’t happen immediately, but the long-term plan is 100% HTTPS . It’s not the easiest move for the internet, but it’s undoubtedly the right move for the internet.

A brief history

The lack of encryption on the internet is not to different from the weaknesses in email and SMTP that make spam so prolific. Once upon a time the internet was mainly a tool of academics, trust was implicit and ethics was paramount. Nobody thought security was of major importance. Everything was done in plain text for performance and easy debugging. That’s why you can use telnet to debug most older popular protocols.

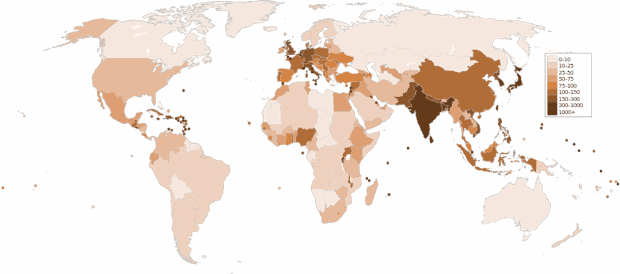

In 2015 the landscape has changed. Academic use of the internet is a small fraction of its traffic. Malicious traffic is a growing concern. Free sharing of information, the norm in the academic world is the exception in some of the places the internet reaches.

Protecting the user

Users deserve to be protected as much as technology will allow. Some folks claim “non-sensitive” data exist. I disagree with this as it’s objective and a matter of personal perspective. What’s sensitive to someone in a certain situation is not sensitive to others. Certain topics that are normal and safe to discuss in most of the world are not safe in others. Certain search queries are more sensitive than others (medical questions, sensitive business research). A web developer doesn’t have a good grasp of what is sensitive or not. It’s specific to the individual user. It’s not every network admin’s right to know if someone on their network browsed and/or purchased pregnancy tests or purchased a book on parenting children with disabilities on Amazon. The former may not go over well at a “free” conservative school in the United States for example. More than just credit card information is considered “sensitive data” in this case. Nobody should be so arrogant as to think they understand how every person on earth might come across their website.

Google and Yahoo took the first step to move search to HTTPS (Bing still seems to be using HTTP oddly enough). This is the obvious second step to protecting the world’s internet users.

Protecting the website’s integrity

Unfortunately you can no longer be certain a user sees a website as you intended it as a web developer. Sorry, but it doesn’t work that way. For years ISP’s have been testing the ability to do things like insert ads into webpages. As far as I’m aware in the U.S. there’s nothing explicitly prohibiting replacing ads. Even net neutrality rules seem limited to degrading or discriminating against certain traffic, not modifying payloads.

Unfortunately you can no longer be certain a user sees a website as you intended it as a web developer. Sorry, but it doesn’t work that way. For years ISP’s have been testing the ability to do things like insert ads into webpages. As far as I’m aware in the U.S. there’s nothing explicitly prohibiting replacing ads. Even net neutrality rules seem limited to degrading or discriminating against certain traffic, not modifying payloads.

I’m convinced the next iteration of the great firewall will not explicitly block content, but censor it. It will be harder to detect than just being denied access to a website. The ability to do large-scale processing like this is becoming more practical. Just remove the offending block of text or image. Citizens of oppressed nations will possibly not notice a thing.

There’s also been attempts to “optimize” images and video. Again even net-neutrality is not entirely clear assuming this isn’t targeted to competitors for example.

But TLS isn’t perfect!

True, but let’s be honest, it’s 8,675,309 times better than using nothing. CA’s are a vulnerability, they are a bottleneck, and a potential target for governments looking to control information. But browsers and OS’s allow you to manage certificates. The ability to stop trusting CA’s exists. Technology will improve over time. I don’t expect us to be still using TLS 1.1 and 1.2 in 2025. Hopefully substantial improvements get made over time. This argument is akin to not buying a computer because there will be a faster one next year. It’s the best option today, and we can replace it with better methods when available.

SSL Certificates are expensive!

First of all, domain validation certificates can be found for as little as $10. Secondly, I fully expect these prices to drop as demand increases. Domain verification certificates have virtually no cost as it’s all automated. The cheaper options will experience substantial growth as demand grows. There’s no limit in “supply” except computing power to generate them. A pricing war is inevitable. It would happen even faster if someone like Google bought a large CA and dropped prices to rock bottom. Certificates will get way cheaper before it’s essential. $10 is the early adopter fee.

But XYZ doesn’t support HTTPS!

True, not everyone is supporting it yet. That will change. It’s also true some (like CDN’s) are still charging insane prices for HTTPS. It’s not practical for everyone to switch today. Or this year. But that will change as well as demand increases. Encryption overhead is nominal. Once again pricing wars will happen once someone wants more than their shopping cart served over SSL. The problem today is demand is minimal, but those who need it must have it. Therefore price gouging is the norm.

Seriously, we need to do this?

Yes, seriously. HTTPS is the right direction for the Internet. There’s valid arguments for not switching your site over today, but those roadblocks will disappear and you should be re-evaluating where you stand periodically. I’ve moved a few sites including this blog (SPDY for now, HTTP/2 soon) to experience what would happen. It was largely a smooth transition. I’ve got some sites still on HTTP. Some will be on HTTP for the foreseeable future due to other circumstances, others will switch sooner. This doesn’t mean HTTP is dead tomorrow, or next year. It just means the future of the internet is HTTPS, and you should be part of it.