About a year ago I asked where the async Google AdSense was. It finally arrived, and you need to do nothing to gain the performance. Awesome!

Category: Web Development

My passion

I’d like to challenge all browser vendors to put together a comprehensive JS API for encryption. I’ll use this blog post to argue why it’s necessary and why it would be a great move.

The Ultimate Security Model

I consider Mozilla Sync (formerly known as “Weave”) to have the ultimate security model. As a brief background, Mozilla Sync is a service that synchronizes your bookmarks, browsing history, etc. between computers using “the cloud”. Obviously this has privacy implications. The solution basically works as follows:

- Your data is created on your computer (obviously).

- Your data is encrypted on your computer.

- Your data is transmitted securely to servers in an encrypted state.

- Your data is retrieved and decrypted on your computer.

The only one who can ever decrypt your data is you. It’s the ultimate security model. The data on the server is encrypted and the server has no way to decrypt it. A typical web service works like this:

- Your data is created on your computer.

- Your data is transmitted securely to servers.

- Your data is transmitted securely back to you.

The whole time it’s on the remote servers, it could in theory be retrieved by criminals, nosy sysadmins, governments, etc. There are times when you want a server to read your data to do something useful, but there are times where it shouldn’t.

The Rise Of Cloud Data And HTML5

It’s no secret that more people are moving more of their data into what salespeople call “the cloud” (Gmail, Dropbox, Remember The Milk, etc). More and more of people’s data is out there in this maze of computers. I don’t need to dwell too much on the issues raised by personal data being stored in places where 4th amendment rights aren’t exactly clear in the US and may not exist in other locales. It’s been written about enough in the industry.

Additionally, newer features like Web Storage allow for 5-10 MB of storage on the client side for data, often used for “offline” versions of a site. This is really handy but makes any computer or cell phone used a potential treasure trove of data if that’s not correctly purged or protected. I expect that 5-10 MB barrier to rise over time just like disk cache. Even my cell phone can likely afford more than 5-10 MB. My digital camera can hold 16 GB in a card a little larger than my fingernail. Local storage is already pretty cheap these days, and will likely only get cheaper.

Mobile phones are hardly immune from all this as they feature increasingly robust browsers capable of all sorts of HTML5 magic. The rise of mobile “apps” is powered largely by the offline abilities and storage functionality. Web Storage facilitates this in many ways but doesn’t provide any inherent security.

Again, I don’t need to dwell here, but people are leaving increasingly sensitive data on devices they use, and services they use. SSL protects them while data is moving over the wire, but does nothing for them once data gets to either end. The time spent over the wire is measured in milliseconds, the time spent at either end can be measured in years.

Enter JS Crypto

My proposal is that there’s a need for native JS Cryptography implementing several popular algorithms like AES, Serpent, Twofish, MD5 (I know it’s busted, but still could be handy for legacy reasons), SHA-256 and expanding as cryptography matures. By doing so, the front end logic can easily and quickly encrypt data before storing or sending.

For example to protect Web Storage before actually saving to globalStorage:
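As a rough sketch of the idea, and only under some loud assumptions: this runs on Node.js and uses its built-in `crypto` module as a stand-in for the native browser API proposed here, with a plain `Map` in place of Web Storage. The helper names `encrypt`, `decrypt` and `storage` are hypothetical, and the choice of AES-256-GCM with an scrypt-derived key is just one reasonable option, not a recommendation from the proposal itself.

```javascript
const nodeCrypto = require('node:crypto');

// Hypothetical stand-in for Web Storage (localStorage / globalStorage).
const storage = new Map();

// Encrypt a string with a password. The random salt, nonce and auth tag are
// stored next to the ciphertext so the record can be decrypted later.
function encrypt(plaintext, password) {
  const salt = nodeCrypto.randomBytes(16);
  const iv = nodeCrypto.randomBytes(12); // 96-bit nonce, the usual size for GCM
  const key = nodeCrypto.scryptSync(password, salt, 32); // 256-bit key
  const cipher = nodeCrypto.createCipheriv('aes-256-gcm', key, iv);
  const data = Buffer.concat([cipher.update(plaintext, 'utf8'), cipher.final()]);
  const tag = cipher.getAuthTag();
  return [salt, iv, tag, data].map((b) => b.toString('base64')).join('.');
}

// Reverse of encrypt(); throws if the password is wrong or the record was altered.
function decrypt(record, password) {
  const [salt, iv, tag, data] = record.split('.').map((s) => Buffer.from(s, 'base64'));
  const key = nodeCrypto.scryptSync(password, salt, 32);
  const decipher = nodeCrypto.createDecipheriv('aes-256-gcm', key, iv);
  decipher.setAuthTag(tag);
  return Buffer.concat([decipher.update(data), decipher.final()]).toString('utf8');
}

// Only the encrypted record ever reaches storage.
storage.set('notes', encrypt('my private notes', 'correct horse battery staple'));
console.log(decrypt(storage.get('notes'), 'correct horse battery staple'));
```

The same encrypted record could just as well be the payload sent to a server, which would then hold data it has no way to read.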

Using XMLHttpRequest or POST/GET one could send encrypted payloads directly to the server over HTTP or HTTPS rather than send raw data to the server. This greatly facilitates the Mozilla Sync model of data security.

This can also be an interesting way to transmit select data in a secure manner while serving the rest of a site over HTTP: just wrap the data sent via XMLHttpRequest in crypto (that assumes a shared key).

I’m sure there are other uses that I haven’t even thought of.

Performance

JS libraries like Crypto-JS are pretty cool, but they aren’t ideal. We need something as fast and powerful as we can get. Like I said earlier, mobile is a big deal here and mobile has performance and power issues. Intel and AMD now have AES Native Instructions (AES NI) for their desktop chips. I suspect mobile chips that don’t have this will eventually get it. I don’t think any amount of JS optimization will get that far performance wise. We’re talking 5-10 MB of client side data today, and that will only grow. We’re not even talking about encrypting data before remote storage (which in theory can break the 10 MB limit).

Furthermore, most browsers already have a Swiss Army knife of crypto support, just not exposed via JS in a nice friendly API. I don’t think any are currently using AES NI when available, though that’s a pretty new feature and I’m sure in time someone will investigate that.

Providing a cryptography API would be a great way to encourage websites to up the security model in an HTML5 world.

Wait a second…

Shouldn’t browsers just encrypt Web Storage, or let OS vendors turn on Full Disk Encryption (FDE)?

Sure, both are great, but web apps should be in control of their own security model regardless of what the terminal is doing. Even if they are encrypted, that doesn’t provide a great security model if the browser has one security model in place for Web Storage and the site has its own authentication system.

Don’t JS libraries already exist, and isn’t JS getting to the point of almost being native?

True, libraries do exist, and JS is getting amazingly fast to the point of threatening native code. However, crypto is now being hardware accelerated. It’s also something that can be grossly simplified by getting rid of libraries. I view JS crypto libraries the way I view ExplorerCanvas. Great, but I’d prefer a native implementation for its performance. These libraries do still have a place as a shim, bridging support for browsers that don’t have a native implementation.

But if data is encrypted before sending to a server, the server can’t do anything with it

That’s the point! This isn’t ideal in all cases. For example, you can’t encrypt photos you intend to share on Facebook or Flickr, but a Dropbox-like service may be an ideal candidate for encryption.

What about export laws?

What about them? Browsers have been shipping cryptography for years. This is just exposing cryptography so web developers can better take advantage and secure user data. If anything JS crypto implementations likely create a bigger legal issue regarding “exporting” cryptography for web developers.

You’re crazy!

Perhaps. To quote Apple’s Think Different campaign:

Here’s to the crazy ones. The misfits. The rebels. The troublemakers. The round pegs in the square holes.

The ones who see things differently. They’re not fond of rules. And they have no respect for the status quo. You can quote them, disagree with them, glorify or vilify them.

About the only thing you can’t do is ignore them. Because they change things. They invent. They imagine. They heal. They explore. They create. They inspire. They push the human race forward.

Maybe they have to be crazy.

How else can you stare at an empty canvas and see a work of art? Or sit in silence and hear a song that’s never been written? Or gaze at a red planet and see a laboratory on wheels?

While some see them as the crazy ones, we see genius. Because the people who are crazy enough to think they can change the world, are the ones who do.

Time to enable the crazy ones to do things in a more secure way.

Updated: Changed key to password to better reflect likely implementation in the pseudocode.

I ran into a peculiar situation with a PHP web application that went from working for several years without incident to suddenly resulting in timeouts and spiking the load on my server. Some investigation traced it back to a seemingly benign and obscure change to PHP’s rand() implementation between 5.3.3 and 5.3.4.

To summarize several hundred lines of code: it gets a value from an array where the index is a random number between X and Y. X and Y are highly unpredictable by nature of the application. It keeps trying with different values until something is returned. Something like:

See it? If you don’t, you shouldn’t feel bad, I didn’t see it initially either.

Prior to PHP 5.3.4, rand() and mt_rand() did not check whether max is greater than min. This changed as a result of bug 46587. That 4 line change made an impact.

Take this example code:

In PHP 5.3.3 you’d get:

    $ php test.php
    right: 3
    wrong: 4
    $ php test.php
    right: 2
    wrong: 5

Despite the incorrect order of max/min it actually worked just fine. It had done so at least since PHP 4.3 (circa 2003) as far as I’m aware.

In PHP 5.3.4:

    $ php test.php
    right: 2
    PHP Warning: mt_rand(): max(1) is smaller than min(5) in /test/test.php on line 4
    wrong:

As a result, this while(){} never terminated until the timeout was reached.

The solution is obviously trivial once you actually trace this bug back:
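One plausible shape for such a fix is to put the two bounds in order before asking for a random number. The sketch below illustrates that guard in JavaScript rather than PHP; `strictRand` is a hypothetical stand-in that mimics the stricter PHP 5.3.4 behaviour by returning `false` when max is smaller than min, and `safeRand` is an illustrative name, not the code that was actually deployed.

```javascript
// Hypothetical stand-in for PHP's mt_rand($min, $max): an inclusive random
// integer. Like PHP 5.3.4 and later, it returns false when max < min.
function strictRand(min, max) {
  if (max < min) {
    return false;
  }
  return min + Math.floor(Math.random() * (max - min + 1));
}

// The guard: order the bounds first, so the caller never passes a max that is
// smaller than min, no matter how unpredictable the two values are.
function safeRand(a, b) {
  return strictRand(Math.min(a, b), Math.max(a, b));
}

console.log('wrong order, no guard:', strictRand(5, 1)); // false, so a retry loop would spin
console.log('wrong order, with guard:', safeRand(5, 1)); // an integer from 1 to 5
```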

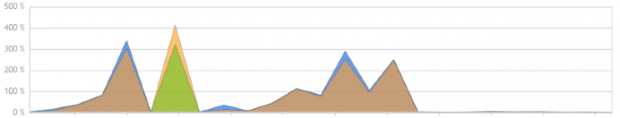

This resulted in several GB of warnings in my error log in a matter of hours. You can also see how it (the brown area) dropped off once the fix was deployed as measured by % of wall clock time:

It’s the little things sometimes that cause all the trouble.

Enterprise CSS/JS/HTML

About HTML5 Boilerplate

I wanted to take a few minutes to discuss HTML5 Boilerplate, a template that’s rapidly going around the web development community. I’ve had a few email threads and chats about this recently and thought I’d just put all my thoughts together in one place now.

I’ll start by saying it’s not a bad template. It’s actually quite good and encompasses many best practices as well as incorporates fixes for many common problems (clearfix, pngfix). What I’d like to make note of is that it’s not really bringing you HTML5 and lots of what it does has nothing to do with HTML5.

Not Really HTML5

For starters you’re not really getting HTML5. HTML5 Boilerplate uses a JavaScript library called Modernizr. As their website explains:

Modernizr does not add missing functionality to browsers; instead, it detects native availability of features and offers you a way to maintain a fine level of control over your site regardless of a browser’s capabilities.

It also lets you apply styles to the new semantic HTML5 elements like <header/>, <footer/> and <section/>.

What don’t you get? Well for starters you’re missing <canvas/> and <video/>. Other than tag elements you’re also missing things like Geolocation, Drag&Drop, web storage, MathML, and the async attribute on <script/>, to name a few. SVG?
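To make the distinction between detecting and adding features concrete, here is a minimal hand-rolled sketch of feature detection. It is not Modernizr's code or API; the function name `detectFeatures` and the shape of its result are assumptions for illustration. It takes the `window` object as a parameter so it can be exercised outside a browser.

```javascript
// Report which features the given window object natively supports.
// Detection only: nothing here adds a missing feature to the browser.
function detectFeatures(win) {
  const doc = win.document;
  const canvas = doc.createElement('canvas');
  const video = doc.createElement('video');
  return {
    canvas: typeof canvas.getContext === 'function',
    video: typeof video.canPlayType === 'function',
    geolocation: 'geolocation' in win.navigator,
    webStorage: 'localStorage' in win,
  };
}

// In a browser: const features = detectFeatures(window);
// A page can then branch on features.canvas, features.video, and so on.
```

A browser that reports `canvas: false` still cannot draw on a canvas; the page only learns that it has to offer something else.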

Pretty much all the headliners in the HTML5 spec aren’t included. Some like <canvas/> could be helped by way of explorercanvas, but that’s not in there by default.

HTML5 Boilerplate also makes reference to things like Access-Control which still doesn’t work in older browsers. They also suggest setting mimetypes for HTML5 video. This isn’t by any means bad, but hardly makes <video/> useful to everyone. Browsers are still pretty fragmented between webm, ogg, and h.264 (mp4). Then you have older versions that support none of these.

Using gzip on ttf, otf, and eot files seems to be a good idea. WOFF files, however, are already compressed and are correctly excluded.

WTF Does This Have To Do With HTML5?

There are lots of things that I would consider best practices, but would hardly consider to be HTML5. For example, pngfix for IE6, .clearfix, apple-touch-icon, and the console.log wrapper are the most obvious.

Setting far-future cache times is good, and disabling Etag is a good idea, assuming you rename the file every time it updates. But what does this have to do with HTML5? Is this even practical for everyone?

Then there is some interesting css work like inline print block. There are also a couple of nice usability fixes that I like. Regardless, they are just good design and UX. Not HTML5.

As for the graceful degradation and mobile optimization… that’s design and css. There is no reason why any HTML4 or XHTML site couldn’t do that today. Most choose not to do so in favor of serving different content (including ads) to different devices.

Options -MultiViews… grumble. I’m not particularly fond of it, but again if it works for you, I wouldn’t push you away from it.

Removing www… I hate this one. In my experience the only people who insist upon this have never dealt with high volume websites that are required to use a CDN by way of a CNAME in front of the site (your domain must be an A record). What is the real benefit here other than some sort of URL aesthetics? I’ll let you in on a little secret: The IP address for this blog is hardly maximizing feng shui.

Best Practices != HTML5

Many of these things are best practices. Some of these depend on your application. Most of these aren’t HTML5.

Let’s just clarify that HTML5 is not about disabling MultiViews, getting rid of the Etag header, and being able to style <section/> elements. HTML5 is more than that.

Your ability to use HTML5 still depends on widespread adoption of modern browsers like the latest and upcoming versions of Firefox, Chrome, Safari, Opera, and even IE 9.

Again, HTML5 Boilerplate is not a bad starting point. My point is you’re not really getting as much as it initially sounds like. It’s a bunch of fixes you likely already have assembled in your toolkit. If that’s helpful: great. But don’t think you’re missing out on a new era of the Internet by not adopting it. Most good web developers have done these things for a long time now.

Several months ago I was looking for a good way to monitor not just my server, but the actual services on the server. Just responding to a ping doesn’t mean everything is OK. As the old saying goes “if you can’t find it, build it”. The result of this is a little project called It’s All Good.

At its core it’s a light framework for checking various aspects of a server and deciding if things are operating within defined parameters or not. So far it has “out of the box” support for:

- CPU Load – As simple as it sounds. Check that your CPU load doesn’t exceed a threshold you define.

- Disk Usage – Sets off an alarm when your server is running low on disk space.

- SMTP Ping – This makes a connection to your SMTP server to check that it’s online and operational.

- MySQL Check – Checks to see if it can make a successful connection to a MySQL server.

- HTTP(s) Check – This can connect to an HTTP or HTTPS server and check that it connected successfully as well as check for a condition on the page. This is handy to make sure a web app is up and running or that your SSL cert isn’t expired.

Like I said, it’s just a framework, so adding other checks is relatively easy. There’s lots more I want to include (memory, disk IO, process monitor for example). It’s designed to monitor the host, not a series of servers (though technically doable). This isn’t Nagios, it’s a way to get a quick glance at your key services on a host.

On its own it doesn’t send any notifications. It’s designed to be combined with the keyword monitoring feature of services like Pingdom, Monitis, Host-Tracker, SiteUptime, or Howsthe.com among others. This way you not only check services, but the server itself. If anything fails, you will be notified by your monitoring provider.
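As a rough illustration of that design, and not the project's actual code, the idea can be sketched in a few lines of JavaScript for Node.js. Each check is a function that returns true when things are within limits, and the page prints a keyword that an external monitor can look for. The check names, the load threshold of 4.0, and the `ALL GOOD` keyword are all assumptions made for this example.

```javascript
const os = require('node:os');

// Hypothetical checks: each returns true when the host is within its limits.
const hostChecks = {
  // 1-minute load average must stay under an assumed threshold of 4.0.
  cpuLoad: () => os.loadavg()[0] < 4.0,
  // Placeholder for a service check such as SMTP, MySQL or HTTP.
  exampleService: () => true,
};

// Run every check and build the line a keyword monitor would look for.
// A check that throws is treated as a failure rather than crashing the page.
function runChecks(checks) {
  const failed = [];
  for (const [name, check] of Object.entries(checks)) {
    let ok = false;
    try {
      ok = check() === true;
    } catch (err) {
      ok = false;
    }
    if (!ok) {
      failed.push(name);
    }
  }
  return failed.length === 0 ? 'ALL GOOD' : 'FAILED: ' + failed.join(', ');
}

console.log(runChecks(hostChecks));
```

The monitoring provider would then be configured to alert whenever the keyword is missing from the response, which covers both a failed check and a server that does not answer at all.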

It’s All Good also has a UI for an admin to view which can give you the status and a basic rundown of its polling data. It’s also designed so that it’s pretty easy to read on mobile devices like the iPhone, making it a great dashboard for on the go.

Lastly it’s designed to be pretty light and quick, so unless you are monitoring a ton of things on your server, it shouldn’t have any real overhead.

So far I’ve only implemented real support for the checks for Linux. I suspect most will work on BSD, and Darwin (though not all). Windows still needs some help. Patches are welcome. I’d also like to support things like IP whitelist/blacklists (automated via RSS fetches), and lots of modules to extend what it can keep track of.

Licensed GPL v2.

Google Pac-Man Hacking

Quick Hack

Here’s a literally 2 minute hack for the Google Pac-Man tribute on the homepage right now to put your own face on Pac-Man (pardon my poor photoshopping):

To try it add the following bookmarklet to your browser by dragging the link below to your bookmark bar:

raccettura-ize pacman

Now go to Google and press “Insert Coin” to play the game. Once the game loads, run the bookmarklet by clicking on it.

Hack yourself into Pac-Man

Want to make your own? You likely have better photoshop skills than me. Download this image and replace the Pac-Man (and optionally Ms. Pac-Man) images with ones of your own keeping the position and sizes the same. Save as a PNG with transparency. Then upload somewhere. Now make a bookmarklet that looks like this (replacing URL_TO_YOUR_IMAGE with the url of your image):

javascript:(function(){document.getElementById('actor0').style['backgroundImage']="url('URL_TO_YOUR_IMAGE')";})()
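If you would rather generate that string than edit it by hand, a small helper along these lines should do. The name `makeBookmarklet` is made up for this sketch, and it assumes you pass the raw image URL (not one that is already percent-encoded, since `encodeURI` would encode the `%` signs again).

```javascript
// Build the bookmarklet shown above for a given image URL.
function makeBookmarklet(imageUrl) {
  // encodeURI leaves single quotes alone, so escape them separately to keep
  // the url('...') value from being cut short.
  const safeUrl = encodeURI(imageUrl).replace(/'/g, '%27');
  return "javascript:(function(){document.getElementById('actor0')" +
    ".style['backgroundImage']=\"url('" + safeUrl + "')\";})()";
}

console.log(makeBookmarklet('http://example.com/my-face.png'));
```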

Now share with your friends.

Permanent Home

Google has now removed Pac-Man from the homepage but it can still be found here.

Edit [5/24/2010 @ 8:45 PM EST]: Added “Permanent Home”.

I ran across the problem recently trying to write to a user’s wall using the Facebook API. The Facebook documentation is hardly sane as it’s a mix of languages, not entirely up to date, and lacks good examples. The error messages are hardly ideal either. “A session key is required” at least leads me in the right direction. “Invalid parameter” is just unacceptable and makes me stabby.

So here’s some cleaned up pseudocode I pulled together that will hopefully be of use to others who bang their heads against the wall. This “works for me” in my limited testing over several days:

Getting the right permissions is key.

The thing that ends up being the most confusing is the session_key. After reading the docs, I was inclined to do:

What you really want is:

You can also adapt this to use stream.publish if you’d like.

Making Websites Faster

I’ve always been somewhat of a fan of minimalism when it comes to websites. The way I figure it:

Simpler + faster = better

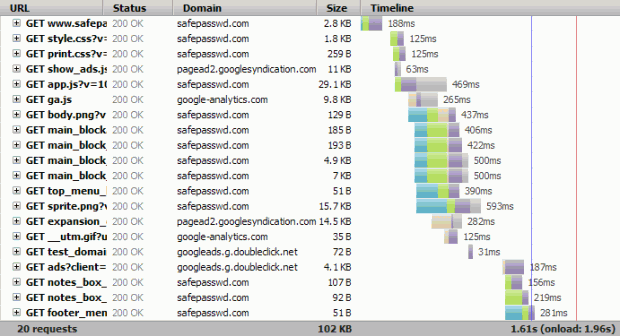

Lately I’ve become slightly obsessed with seeing how much I can tweak a website to perform faster. In this case it’s my password generator SafePasswd.com, which I built in 2006 and overhauled in 2009. It’s always been somewhat of a playground for me to try things a little differently.

The old site circa 2006 took about 5 seconds to load. It was sometimes a little more if the server was under load. After an overhaul I got down to about 3.5 seconds. A solid and respectable improvement considering the new version is way better.

After my latest round of optimization with a clear cache, I’m down to 1.61 seconds, and I think I can get it slightly lower. Google Page Speed score is now a 95 and YSlow is B (83) or A (95) for v2 and v2 small site respectively.

Most of the improvements were relatively small and simple. A few required some backend changes to make it all work from a technical perspective. Even with a fairly image-centric design it’s possible to get pretty decent performance.

Anyone who cares about website performance comes to the quick realization that ads are a huge slowdown. They are also the source of income for many websites. I somewhat casually mentioned back in December that Google was beta testing an async Google Analytics call. I found a bug in the early version, but since the update it works extremely well and is non-blocking. It’s rather awesome.

Google AdSense, like most ad networks, is still a major slowdown. Often ads are implemented via a script which itself can include several other resources (including other JavaScript). Because it’s just a <script/> it’s blocking. It would be nice if AdSense had an asynchronous version that lets you specify where it should insert the ad once loaded, by passing an element object or id. It could then be non-blocking and speed up a large number of websites thanks to its popularity.
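To sketch what that could look like from the page's side: the script element is created from JavaScript and marked async, and the id of the target element is handed to the ad script. This is not AdSense's actual API. The function name `loadAdAsync`, the `data-ad-target` attribute and the example URL are all hypothetical, and the document is passed in as a parameter so the snippet is easy to try outside a browser.

```javascript
// Inject an ad script without blocking the rest of the page.
// `doc` is the page's document; `targetId` names the element the ad script
// would fill in once it has loaded.
function loadAdAsync(doc, adScriptUrl, targetId) {
  const script = doc.createElement('script');
  script.src = adScriptUrl;
  script.async = true; // let HTML parsing continue while the script downloads
  script.setAttribute('data-ad-target', targetId); // hypothetical hand-off to the ad code
  doc.head.appendChild(script);
  return script;
}

// In a browser, for example:
// loadAdAsync(document, 'https://ads.example.com/show_ads.js', 'sidebar-ad');
```

The extra setup is the one cost: the page needs a placeholder element with that id, and the ad script has to look it up instead of writing itself in place.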

I can’t find any reason this wouldn’t technically be possible. It would make setup slightly more complicated, but the payoff for those who choose this implementation would be a faster website. Perhaps Steve Souders could poke the AdSense folks for a faster solution.

I’m also still hoping for SSL support. Another place where Google Analytics is ahead.