I’ve seen various people complain about performance problems when using services like Google’s DNS or OpenDNS. These problems generally arise because many large websites serve at least part of their content, and sometimes the entire site, from a Content Distribution Network (CDN). With a third-party DNS service, the CDN can give you a sub-optimal response, and your connection is slower than it needs to be.

I’ve worked on large websites and set up some websites from DNS to HTML. As a result, I’ve got some experience in this realm.

How DNS Works

To understand why this is, you first need to know how DNS works. When you connect to any site, your computer first makes a DNS query to get an IP address for the server(s) that will give you the content you requested. For example, to connect to this blog, your computer asks your ISP’s DNS servers for robert.accettura.com and gets an IP back. Your ISP’s DNS either has this information cached from a previous request, or it asks the website’s DNS what IP to use, then relays the information back to you.

This looks something like this schematically:

[You] --DNS query--> [ISP DNS] --DNS query--> [Website DNS] --response--> [ISP DNS] --response--> [You]

Next your computer contacts that IP and requests the web page you wanted. The server then gives your computer the requested content. That looks something like this:

[You] --http request--> [Web Server] --response--> [You]

That’s how DNS works, and how you get a basic web page.
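
To make the caching step concrete, here’s a minimal sketch of that lookup path as a toy model in Python. Nothing here touches the network: the `CachingResolver` class and the address (taken from the reserved documentation range) are stand-ins I made up for illustration, and a real resolver would also expire cached answers after their TTL.

```python
# Toy model of the lookup path: client -> ISP resolver (with cache) -> website DNS.
# The address is a placeholder from the documentation range (RFC 5737),
# not the blog's real IP.
AUTHORITATIVE = {"robert.accettura.com": "192.0.2.10"}


class CachingResolver:
    """Stands in for the ISP's DNS server (hypothetical, no TTL handling)."""

    def __init__(self, authoritative):
        self.authoritative = authoritative
        self.cache = {}
        self.upstream_queries = 0  # how often we had to ask the website's DNS

    def resolve(self, name):
        if name in self.cache:
            return self.cache[name]  # answered from a previous request
        self.upstream_queries += 1
        ip = self.authoritative[name]  # ask the website's DNS
        self.cache[name] = ip
        return ip


isp = CachingResolver(AUTHORITATIVE)
first = isp.resolve("robert.accettura.com")   # goes upstream
second = isp.resolve("robert.accettura.com")  # served from cache
print(first, second, isp.upstream_queries)
```

The second lookup never reaches the website’s DNS, which is why repeat queries through a busy resolver tend to be quick.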

How a CDN Works

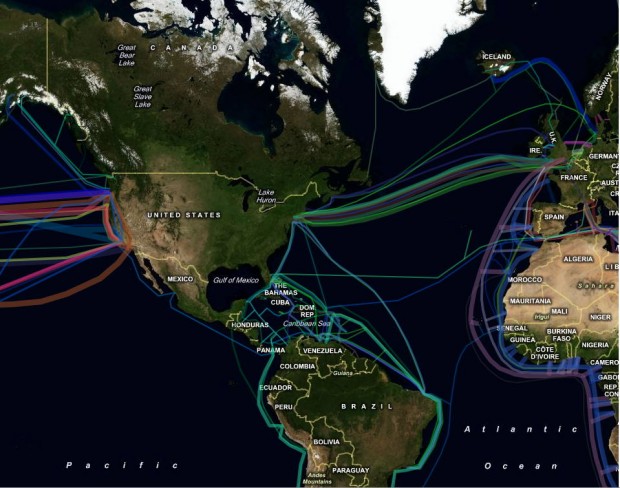

Now when your website gets large enough, you may have servers in multiple data centers around the world, or contract with a service provider who has these servers for you (most contract). This is called a content distribution network (CDN). Part of your website, or all of it, may be hosted with a CDN. The idea is that if you put servers close to your users, they will get content faster.

Say the user is in New York, and the server is in Los Angeles. Your connection may look something like this:

New York: 12.565 ms 10.199 ms

San Jose: 98.288 ms 96.759 ms 90.799 ms

Los Angeles: 88.498 ms 92.070 ms 90.940 ms

Now if the user is in New York and the server is in New York:

New York: 21.094 ms 20.573 ms 19.779 ms

New York: 19.294 ms 16.810 ms 24.608 ms

In both cases I’m paraphrasing a real traceroute for simplicity. As you can see, keeping the traffic in New York vs going across the country is faster since it reduces latency. That’s just in the US. Imagine someone in Europe or Asia. The difference can be large.
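
To put a number on that gap, here’s a quick back-of-the-envelope calculation in Python using the final-hop timings from the two paraphrased traces above. It’s only a sketch: three samples per path is not a benchmark, and the variable names are mine.

```python
from statistics import mean

# Final-hop round-trip times (ms) copied from the paraphrased traceroutes above.
cross_country = [88.498, 92.070, 90.940]  # New York user, Los Angeles server
same_city = [19.294, 16.810, 24.608]      # New York user, New York server

saved_per_round_trip = mean(cross_country) - mean(same_city)

print(f"cross-country: {mean(cross_country):.1f} ms")
print(f"same city:     {mean(same_city):.1f} ms")
print(f"saved:         {saved_per_round_trip:.1f} ms per round trip")
```

That works out to roughly 70 ms saved on every round trip, and a single page usually needs many of them.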

The way this happens is a company using a CDN generally sets up a CNAME entry in their DNS records to point to their CDN. Think of a CNAME as an alias that points to another DNS record. For example Facebook hosts their images and other static content on static.ak.facebook.com. static.ak.facebook.com is a CNAME to static.ak.facebook.com.edgesuite.net. (the period at the end is normal). We’ll use this as an example from here on out…

This adds an extra DNS lookup, which ironically slows things down! When the resolver sees that the record is a CNAME, another query is needed to get an IP for the CNAME’s value. In theory, though, we make up that time and then some by using a closer server, as illustrated earlier. The end result is something like this:

$ host static.ak.facebook.com

static.ak.facebook.com is an alias for static.ak.facebook.com.edgesuite.net.

static.ak.facebook.com.edgesuite.net is an alias for a749.g.akamai.net.

a749.g.akamai.net has address 64.208.248.243

a749.g.akamai.net has address 64.208.248.208

That last query goes to the CDN’s DNS instead of the website’s. The CDN gives an IP (sometimes multiple) that it feels is closest to whoever is requesting it (the DNS server). That’s the important takeaway from this crash course in DNS. The CDN only sees the DNS server of the requester, not the requester itself. It therefore gives an IP that it thinks is closest based on the DNS server making the query.
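
Here’s a minimal sketch of that behavior in Python. The alias chain and the two addresses come from the `host` output in this post, but the `EDGES` table, the location labels, and the `resolve` function are assumptions for illustration; a real CDN’s mapping logic is far more involved and isn’t public.

```python
# Alias chain taken from the `host` output in this post.
CNAMES = {
    "static.ak.facebook.com": "static.ak.facebook.com.edgesuite.net",
    "static.ak.facebook.com.edgesuite.net": "a749.g.akamai.net",
}

# Hypothetical edge map: the CDN's DNS keys its answer on where the
# *resolver* is, because that is all it can see. The addresses are ones
# returned by the NJ and Texas lookups in this post; the location labels
# are assumptions.
EDGES = {
    "a749.g.akamai.net": {
        "new-york": "64.208.248.243",
        "dallas": "72.247.246.16",
    }
}


def resolve(name, resolver_location):
    """Follow CNAMEs, then let the CDN pick an edge for the resolver."""
    while name in CNAMES:
        name = CNAMES[name]
    return EDGES[name][resolver_location]


# A New Jersey user going through an ISP resolver near New York:
print(resolve("static.ak.facebook.com", "new-york"))
# The same user going through a third-party resolver that sits in Dallas:
print(resolve("static.ak.facebook.com", "dallas"))
```

Notice that the user’s own location never appears as an argument. Only the resolver’s location does, which is exactly the limitation described above.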

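The use of a CNAME is also why many large websites will 301 (redirect) you from foo.com to www.foo.com. A bare domain like foo.com can’t be a CNAME; it has to be an A record. To keep you behind the CDN, they redirect you to the www hostname, which can be a CNAME.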

Now let’s see it in action!

Here’s what a request from NJ for an IP for static.ak.facebook.com looks like:

$ host static.ak.facebook.com

static.ak.facebook.com is an alias for static.ak.facebook.com.edgesuite.net.

static.ak.facebook.com.edgesuite.net is an alias for a749.g.akamai.net.

a749.g.akamai.net has address 64.208.248.243

a749.g.akamai.net has address 64.208.248.208

Now let’s trace the connection to one of these responses:

$ traceroute static.ak.facebook.com

traceroute: Warning: static.ak.facebook.com has multiple addresses; using 64.208.248.243

traceroute to a749.g.akamai.net (64.208.248.243), 64 hops max, 52 byte packets

1 192.168.x.x (192.168.x.x) 1.339 ms 1.103 ms 0.975 ms

2 c-xxx-xxx-xxx-xxx.hsd1.nj.comcast.net (xxx.xxx.xxx.xxx) 25.431 ms 19.178 ms 22.067 ms

3 xe-2-1-0-0-sur01.ebrunswick.nj.panjde.comcast.net (68.87.214.185) 9.962 ms 8.674 ms 10.060 ms

4 xe-3-1-2-0-ar03.plainfield.nj.panjde.comcast.net (68.85.62.49) 10.208 ms 8.809 ms 10.566 ms

5 68.86.95.177 (68.86.95.177) 13.796 ms

68.86.95.173 (68.86.95.173) 12.361 ms 10.774 ms

6 tengigabitethernet1-4.ar5.nyc1.gblx.net (64.208.222.57) 18.711 ms 18.620 ms 17.337 ms

7 64.208.248.243 (64.208.248.243) 55.652 ms 24.835 ms 17.277 ms

That’s only about 50 miles away, with latency as low as 17 ms. Not bad!

Now here’s the same query done from Texas:

$ host static.ak.facebook.com

static.ak.facebook.com is an alias for static.ak.facebook.com.edgesuite.net.

static.ak.facebook.com.edgesuite.net is an alias for a749.g.akamai.net.

a749.g.akamai.net has address 72.247.246.16

a749.g.akamai.net has address 72.247.246.19

Now let’s trace the connection to one of these responses:

$ traceroute static.ak.facebook.com

traceroute to static.ak.facebook.com (63.97.123.59), 30 hops max, 40 byte packets

1 xxx.xxx.xxx.xxx (xxx.xxx.xxx.xxx) 2.737 ms 2.944 ms 3.188 ms

2 98.129.84.172 (98.129.84.172) 0.423 ms 0.446 ms 0.489 ms

3 98.129.84.177 (98.129.84.177) 0.429 ms 0.453 ms 0.461 ms

4 dal-edge-16.inet.qwest.net (205.171.62.41) 1.350 ms 1.346 ms 1.378 ms

5 * * *

6 63.146.27.126 (63.146.27.126) 47.582 ms 47.557 ms 47.504 ms

7 0.ae1.XL4.DFW7.ALTER.NET (152.63.96.86) 1.640 ms 1.730 ms 1.725 ms

8 TenGigE0-5-0-0.GW4.DFW13.ALTER.NET (152.63.97.197) 2.129 ms 1.976 ms TenGigE0-5-1-0.GW4.DFW13.ALTER.NET (152.63.101.62) 1.783 ms

9 (63.97.123.59) 1.450 ms 1.414 ms 1.615 ms

The response this time is from the same city and a mere 1.6 ms away!

For comparison, www.facebook.com does not appear to be on a CDN. Facebook serves this content directly from its own servers (which are in a few data centers). From NJ the ping time averages 101.576 ms, and from Texas 47.884 ms. That’s a huge difference.

Since www.facebook.com hosts pages generated specifically for each user, putting them through a CDN would be pointless, since the CDN would have to go to Facebook’s servers for every request. For things like images and stylesheets, a CDN can cache them at each node.

Wrapping It Up

The reason a DNS service like Google’s DNS or OpenDNS can slow you down is that while the DNS query itself may be quick, you may no longer be using the closest servers a CDN can give you. You generally only make a few DNS queries per pageview, but may make a dozen or so requests for the different assets that compose a page. In cases where a website is behind a CDN, I’m not sure that using even a faster DNS service will ever pay off. For smaller sites, it obviously would, since this variable is removed from the equation.
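
A rough cost model shows why the trade rarely works out. This Python sketch assumes every lookup and every asset request costs one serial round trip, which real browsers don’t do (they fetch in parallel and reuse connections), and the DNS latencies are made up. The asset round trips echo the same-city (about 20 ms) and cross-country (about 90 ms) figures from earlier.

```python
def page_time_ms(dns_lookups, dns_ms, asset_requests, asset_rtt_ms):
    """Rough serial cost: every lookup and every asset costs one round trip."""
    return dns_lookups * dns_ms + asset_requests * asset_rtt_ms


# Illustrative numbers only: a few lookups and a dozen assets per page.
# Slower ISP DNS, but the CDN hands back a nearby edge (~20 ms away).
isp_dns = page_time_ms(dns_lookups=3, dns_ms=30, asset_requests=12, asset_rtt_ms=20)
# Faster third-party DNS, but the CDN hands back a distant edge (~90 ms away).
public_dns = page_time_ms(dns_lookups=3, dns_ms=10, asset_requests=12, asset_rtt_ms=90)

print(f"ISP DNS:         {isp_dns} ms")
print(f"third-party DNS: {public_dns} ms")
```

Under these assumptions the faster resolver saves 60 ms on lookups and then gives back 840 ms on assets. The exact numbers will vary, but the asset requests dominate because there are so many more of them.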

There are a few proposals floating around out there to resolve this limitation in DNS, but at this point there’s nothing in place.